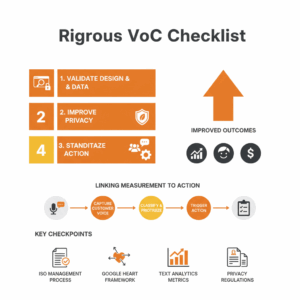

Why do executives need a rigorous VoC checklist now?

Leaders face signal loss in customer feedback. Silos fragment comments, channels multiply, and dashboards hide the root causes that drive churn and cost-to-serve. A rigorous VoC checklist gives executives a simple, testable way to validate design, data integrity, and governance before scaling. Clear checkpoints reduce rework, improve privacy posture, and align product, CX, and operations around a common feedback language. Strong VoC systems link measurement to action by standardizing how the enterprise captures, classifies, and prioritizes the customer voice. ISO guidance treats customer satisfaction monitoring as a defined management process, not an ad hoc activity.¹ Google’s HEART framework shows how consistent metrics connect experience signals to product outcomes.² Robust text analytics demands transparent model evaluation, including precision, recall, and F1.³ Privacy regulations require lawful bases for processing and clear retention policies.⁴ In Australia, the Privacy Act sets additional expectations for handling personal information.⁵

What is a VoC system in practical terms?

A Voice of Customer system is the end-to-end capability that collects, classifies, analyzes, and operationalizes customer feedback across surveys, conversations, reviews, and telemetry. VoC unifies structured metrics such as CSAT and NPS with unstructured signals such as call transcripts and app store reviews. Leading platforms describe this as a continuous loop that integrates capture, insight, and action.⁶ ⁷ Many organizations still oversimplify the loop by chasing a single score. NPS was introduced as the one number a company needs to grow,⁸ but later research and industry debate have questioned whether any single metric reliably predicts growth across contexts, so leaders should track a portfolio of outcome and journey metrics tailored to their business model. The practical definition centers on standard inputs, well-defined taxonomies, and closed-loop workflows that create measurable change. Executives should treat VoC as a managed product with a backlog, roadmap, and service levels rather than a one-off survey program.

How does the VoC readiness checklist work?

Teams use the VoC readiness checklist to assess design, data, analytics, activation, and governance. The checklist organizes controls into five sections. Each section includes a clear Subject–Verb–Object lead and a pass or action note. The artifact doubles as an implementation plan and an audit trail. The structure mirrors ISO guidance on monitoring customer satisfaction and emphasizes lawful data handling and model evaluation.¹ ³ ⁴ The checklist below ships as a template that program owners can copy into their preferred work management tool. Use it as a quarterly review and as a pre-flight for new feedback sources. The evergreen goal is to move items from red to green while documenting assumptions, data lineage, and ownership. Program managers should assign each control to an accountable owner with a due date and link to evidence.

VoC Readiness Checklist Template

1. Strategy and scope

- Define goals. Specify the decision this VoC stream must inform and the target outcome metric.
- Map journeys. List covered journeys, channels, and customer segments with inclusion criteria.
- Align metrics. Select a balanced set of outcome, perception, and behavior metrics.² ⁸

2. Data capture and consent

- Document lawful basis. Record consent or other legal grounds for each data source with location and timestamp.⁴ ⁵
- Minimize data. Capture only fields required for analysis and action.
- Secure ingestion. Verify encryption in transit and at rest with key ownership documented.

3. Classification and taxonomy

- Adopt the taxonomy. Apply the feedback taxonomy in this document to all sources.
- Enable multi-labeling. Allow comments to carry multiple themes, intents, and journey stages.
- Version the schema. Store taxonomy version, date, and change summary in metadata.

4. Analytics and model quality

- Define evaluation. Report precision, recall, and F1 for each classifier at the theme level.³
- Calibrate sentiment. Validate sentiment scores against human benchmarks for target channels.
- Track bias. Run error analysis by segment and channel with corrective actions.

5. Activation, governance, and improvement

- Close the loop. Route themes to owners with SLAs and playbooks.
- Publish changes. Log fixes, releases, and customer-visible improvements with dates.
- Review governance. Run quarterly reviews with Legal, Security, and Data leaders.
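
For teams that prefer to track the checklist as data rather than in a document, the controls above can be sketched as simple records. This is a minimal illustration, not a prescribed tool: the `Control` class, the red/amber/green status scale, the owner names, and the evidence URL are all assumptions made for the example.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date


@dataclass
class Control:
    """One checklist control (hypothetical structure for illustration)."""
    section: str
    name: str
    owner: str
    due: date
    status: str            # assumed scale: "red", "amber", or "green"
    evidence_url: str = ""


def section_readiness(controls):
    """Return the share of green controls in each section."""
    totals = defaultdict(int)
    greens = defaultdict(int)
    for c in controls:
        totals[c.section] += 1
        if c.status == "green":
            greens[c.section] += 1
    return {section: greens[section] / totals[section] for section in totals}


def overdue(controls, today):
    """List the names of non-green controls whose due date has passed."""
    return [c.name for c in controls if c.status != "green" and c.due < today]


controls = [
    Control("Strategy and scope", "Define goals", "cx.lead",
            date(2025, 3, 1), "green", "https://example.com/evidence/goals"),
    Control("Strategy and scope", "Map journeys", "cx.lead",
            date(2025, 3, 15), "amber"),
    Control("Data capture and consent", "Document lawful basis",
            "privacy.officer", date(2025, 2, 1), "red"),
]

readiness = section_readiness(controls)
late = overdue(controls, today=date(2025, 3, 10))
print(readiness)
print(late)
```

A quarterly review could start from the `readiness` and `late` values and then drill into the evidence links for any control that is not yet green.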

What taxonomy makes feedback analysis consistent across channels?

Executives need a consistent taxonomy to turn noisy comments into repeatable, comparable insights. A feedback taxonomy is the controlled vocabulary and structure that expresses customer themes, intents, and outcomes. The taxonomy must be channel-agnostic, multi-label, and versioned. Braun and Clarke’s guidance on thematic analysis reinforces the need for explicit, transparent coding frameworks to ensure reliability and validity in qualitative work.⁹ Enterprises should align taxonomy layers to journey and product structures to connect insights to action. Leading VoC platforms promote structured hierarchies because consistent tagging improves trend analysis, route-to-owner automation, and root cause discovery.⁶ ⁷ A good taxonomy balances stability with adaptability. The core categories should change slowly. The subthemes should evolve with product and policy changes. Versioning preserves comparability across time.

Feedback Taxonomy Template

Unit of analysis: One customer verbatim, call segment, chat turn, case note, or review entry.

Label structure:

- Category: The top-level area of experience such as Product, Pricing, Policy, People, Process, or Platform.
- Theme: The specific topic within the category such as Onboarding, Billing, Returns, Outage, Accessibility, or Security.
- Subtheme: The granular facet such as Password Reset, Multi-factor Login, Direct Debit Error, or Refund Delay.
- Intent: The customer purpose such as Inform, Request, Complain, Escalate, or Praise.
- Sentiment: The expressed valence such as Negative, Neutral, Positive, with confidence.
- Journey Stage: The lifecycle point such as Discover, Buy, Start, Use, Get Help, Renew, or Leave.
- Channel: The source such as Phone, Chat, Email, App Review, Social, Survey, or Web Form.
- Product or Service: The affected SKU, plan, or service line.
- Impact Tag: The business impact such as Churn Risk, Compliance Risk, Cost to Serve, Revenue Opportunity, or Vulnerable Customer.

Metadata fields: Source, Timestamp, Language, Customer ID (if permitted), Taxonomy Version, Classifier Version, Rater ID.
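
The template above can be expressed as a typed record so that every source lands in the same shape. The sketch below is one possible encoding, not a reference schema: the class names, field names, and the `validate` helper are hypothetical, and it treats the example values listed in the template as closed sets, which a real program may not want to do.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Assumption: the example values from the template are treated as closed sets.
ALLOWED_INTENTS = {"Inform", "Request", "Complain", "Escalate", "Praise"}
ALLOWED_SENTIMENTS = {"Negative", "Neutral", "Positive"}
ALLOWED_STAGES = {"Discover", "Buy", "Start", "Use", "Get Help", "Renew", "Leave"}


@dataclass
class Label:
    category: str
    theme: str
    subtheme: Optional[str] = None


@dataclass
class FeedbackRecord:
    text: str                      # one unit of analysis
    labels: List[Label]            # multi-label: several themes per comment
    intent: str
    sentiment: str
    sentiment_confidence: float
    journey_stage: str
    channel: str
    product: str
    impact_tags: List[str] = field(default_factory=list)
    # Metadata fields
    source: str = ""
    timestamp: str = ""
    language: str = "en"
    customer_id: Optional[str] = None   # only if permitted
    taxonomy_version: str = ""
    classifier_version: str = ""
    rater_id: Optional[str] = None


def validate(record):
    """Return a list of problems; an empty list means the record passes."""
    problems = []
    if not record.labels:
        problems.append("at least one label is required")
    if record.intent not in ALLOWED_INTENTS:
        problems.append(f"unknown intent: {record.intent}")
    if record.sentiment not in ALLOWED_SENTIMENTS:
        problems.append(f"unknown sentiment: {record.sentiment}")
    if not 0.0 <= record.sentiment_confidence <= 1.0:
        problems.append("sentiment_confidence must be between 0 and 1")
    if record.journey_stage not in ALLOWED_STAGES:
        problems.append(f"unknown journey stage: {record.journey_stage}")
    if not record.taxonomy_version:
        problems.append("taxonomy_version is required")
    return problems


record = FeedbackRecord(
    text="I was charged twice and the refund still has not arrived.",
    labels=[
        Label("Process", "Billing", "Direct Debit Error"),
        Label("Process", "Returns", "Refund Delay"),
    ],
    intent="Complain",
    sentiment="Negative",
    sentiment_confidence=0.91,
    journey_stage="Get Help",
    channel="Chat",
    product="Home Broadband",
    impact_tags=["Churn Risk"],
    taxonomy_version="2.1.0",
    classifier_version="0.4.2",
)
problems = validate(record)
print(problems)
```

Requiring `taxonomy_version` on every record is what keeps trends comparable when subthemes change between versions.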

How should teams apply the taxonomy to real data?

Leaders create consistency by combining human coding with machine assistance. Teams define gold-standard labeled sets that cover high-volume themes, then train classifiers and evaluate using precision, recall, and F1.³ Analysts maintain a living codebook with examples, edge cases, and disambiguation rules. Product and operations owners refine subthemes when release notes show new failure modes. Reviewers sample classifications weekly to validate quality and to spot drift. CRISP-DM remains a practical lifecycle for this work. It asks teams to define business objectives, understand data, prepare data, model, evaluate, and deploy, then loop.¹⁰ HEART and journey metrics keep the analysis anchored to outcomes that matter.² A strong governance rhythm protects privacy and preserves comparability as the taxonomy evolves. Documentation that includes change logs and version fields ensures year-over-year trend integrity.
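
To make the evaluation step concrete, the sketch below computes precision, recall, and F1 per theme for a multi-label classifier using the standard definitions. It is written in plain Python for readability; the function name and the tiny gold set are illustrative, and returning 0.0 when a denominator is zero is an assumed convention. Libraries such as scikit-learn provide equivalent functions.

```python
def theme_scores(gold, predicted, themes):
    """Compute precision, recall, and F1 for each theme.

    gold and predicted are parallel lists of sets of theme names,
    one set per comment, so a comment can carry several themes.
    """
    scores = {}
    for theme in themes:
        pairs = list(zip(gold, predicted))
        tp = sum(1 for g, p in pairs if theme in g and theme in p)
        fp = sum(1 for g, p in pairs if theme not in g and theme in p)
        fn = sum(1 for g, p in pairs if theme in g and theme not in p)
        # Assumed convention: report 0.0 when a denominator is zero.
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        if precision + recall:
            f1 = 2 * precision * recall / (precision + recall)
        else:
            f1 = 0.0
        scores[theme] = {"precision": precision, "recall": recall, "f1": f1}
    return scores


# Illustrative gold-standard labels and model predictions.
gold = [{"Billing"}, {"Billing", "Outage"}, {"Outage"}, {"Onboarding"}]
predicted = [{"Billing"}, {"Billing"}, {"Outage", "Billing"}, {"Onboarding"}]

scores = theme_scores(gold, predicted, ["Billing", "Outage", "Onboarding"])
for theme, s in scores.items():
    print(theme, round(s["precision"], 2), round(s["recall"], 2), round(s["f1"], 2))
```

In this toy set the classifier over-predicts Billing (lower precision) and misses one Outage comment (lower recall), which is the kind of theme-level difference an aggregate accuracy figure would hide.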

Where do surveys, transcripts, and reviews fit in the pipeline?

Executives should treat each source as a structured feed that lands in a common schema. Surveys deliver scored items and verbatims from targeted samples. Contact center transcripts deliver turn-by-turn language with acoustic or sentiment metadata. Public reviews deliver candid narratives and star ratings at scale. Each source must carry lawful-basis and retention fields so the program can evidence compliance with GDPR, where Article 6 governs the lawful basis for processing, and with local law.⁴ ⁵ Each source must map to the taxonomy and carry a lineage field that records capture method and preprocessing steps. Speech-to-text outputs should record version and provider to support reprocessing if accuracy improves. Leading VoC platforms support this multi-source design and provide connectors, APIs, and action workflows.⁶ ⁷ A unified repository unlocks cross-channel comparisons, faster root cause analysis, and automated routing to owners with defined service levels.
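
One way to picture the common schema is a small normalizer that refuses to ingest a record without a lawful basis and stamps each record with a deletion date and lineage. The sketch below is an assumption-heavy illustration: the raw field names (`verbatim`, `utterance`, `body`), the retention periods, and the lineage strings are invented for the example and do not reflect any specific platform or legal requirement.

```python
from datetime import date, timedelta

# Hypothetical name of the free-text field in each raw source.
TEXT_FIELD = {"survey": "verbatim", "transcript": "utterance", "review": "body"}


def to_common_record(source_type, raw, lawful_basis, retention_days,
                     captured_on, lineage):
    """Map one raw item to the shared schema with consent and lineage."""
    if source_type not in TEXT_FIELD:
        raise ValueError(f"unknown source type: {source_type}")
    if not lawful_basis:
        raise ValueError("lawful basis is required before ingestion")
    return {
        "channel": source_type,
        "text": raw[TEXT_FIELD[source_type]].strip(),
        "score": raw.get("score"),          # None when the source has no score
        "lawful_basis": lawful_basis,
        "captured_on": captured_on,
        "delete_after": captured_on + timedelta(days=retention_days),
        "lineage": list(lineage),           # capture method and preprocessing
    }


def due_for_deletion(records, today):
    """Return records whose retention period has ended."""
    return [r for r in records if r["delete_after"] <= today]


records = [
    to_common_record("survey", {"verbatim": " Easy to sign up. ", "score": 9},
                     "consent", 365, date(2025, 1, 1),
                     ["email survey", "whitespace trimmed"]),
    to_common_record("transcript", {"utterance": "My bill is wrong again."},
                     "legitimate interests", 90, date(2025, 1, 1),
                     ["speech-to-text provider X v3", "PII redacted"]),
]

expired = due_for_deletion(records, today=date(2025, 6, 1))
print([r["channel"] for r in expired])
```

Recording the speech-to-text provider and version in `lineage` is what makes later reprocessing traceable.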

How do we measure impact, not just insight?

Executives should ask for closed-loop metrics that link themes to action and action to outcomes. Teams define owner SLAs for high-impact themes, such as a 14-day fix plan for Churn Risk issues. Analysts track pre- and post-change trends for the targeted theme while controlling for seasonality. Product managers connect taxonomy items to defect or feature IDs so change logs tie back to customer voice. Leaders should monitor both experience metrics and business outcomes to avoid metric myopia. NPS was proposed as a single growth predictor,⁸ but the debate that followed suggests single-score approaches do not consistently predict growth, which is why a multi-metric portfolio is the safer design. HEART adds texture by balancing Happiness, Engagement, Adoption, Retention, and Task Success.² Model metrics such as F1 help keep the classification layer trustworthy as volumes scale.³ Governance maintains privacy and compliance as the program matures under changing regulation.⁴ ⁵
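
A simple way to read pre- and post-change trends is to compare the theme's share of all comments before and after a fix, then subtract the shift seen over the same calendar windows a year earlier. The sketch below illustrates that idea only; the function names and counts are invented, and the year-over-year subtraction is a crude seasonality control rather than a full statistical method. The 14-day SLA check reuses the example figure from the paragraph above.

```python
from datetime import date, timedelta


def theme_rate(mentions, total):
    """Share of comments that mention the theme."""
    return mentions / total if total else 0.0


def adjusted_change(pre, post, pre_last_year, post_last_year):
    """Change in theme share, net of last year's same-period change.

    Each argument is a (theme_mentions, total_comments) tuple.
    """
    change = theme_rate(*post) - theme_rate(*pre)
    seasonal = theme_rate(*post_last_year) - theme_rate(*pre_last_year)
    return change - seasonal


def sla_breached(opened_on, today, sla_days=14, closed_on=None):
    """True if the theme had no fix plan by the SLA deadline."""
    deadline = opened_on + timedelta(days=sla_days)
    finished = closed_on if closed_on is not None else today
    return finished > deadline


# Illustrative counts for a "Refund Delay" theme around a process fix.
effect = adjusted_change(
    pre=(120, 1000), post=(60, 1000),
    pre_last_year=(100, 1000), post_last_year=(90, 1000),
)
print(round(effect, 3))

print(sla_breached(date(2025, 3, 1), today=date(2025, 3, 20)))
print(sla_breached(date(2025, 3, 1), today=date(2025, 3, 20),
                   closed_on=date(2025, 3, 10)))
```

Here the theme share falls by six percentage points, of which one point matches the seasonal pattern, leaving roughly five points that could plausibly be attributed to the fix.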

Which templates can teams use today?

Teams can start fast with two deliverables. The VoC readiness checklist validates the operating model before scale. The feedback taxonomy template standardizes classification across sources. The combination reduces rework and increases trust. ISO 10004 provides a management lens for monitoring and measuring customer satisfaction.¹ Google’s HEART framework supplies an outcome-oriented view for product and service ecosystems.² Scikit-learn’s definitions keep model evaluation unambiguous in documentation.³ GDPR and the Australian Privacy Act set clear expectations for lawful processing and data handling.⁴ ⁵ Medallia and Qualtrics provide reference architectures and practical playbooks for multi-source capture and closed-loop action.⁶ ⁷ Leaders who adopt these templates create a shared language, improve decision speed, and ship changes that customers notice.

FAQ

What is a Voice of Customer system and why does it matter to CustomerScience.com.au clients?

A Voice of Customer system is a managed capability that collects, classifies, analyzes, and operationalizes feedback across surveys, service interactions, and public reviews. It aligns Customer Science clients on shared taxonomies, governance, and closed-loop action so insights lead to measurable change.⁶ ⁷

How should we structure a feedback taxonomy for omnichannel operations?

Use a layered schema that includes Category, Theme, Subtheme, Intent, Sentiment, Journey Stage, Channel, Product or Service, and Impact Tag. Make it multi-label, versioned, and channel-agnostic to preserve comparability over time.⁹

Which metrics should executives track beyond NPS or CSAT?

Track a portfolio that pairs experience metrics with business outcomes. Combine HEART elements such as Adoption and Task Success with churn, revenue, and cost-to-serve. Avoid single-score dependence because growth prediction varies by context.² ⁸

How do we evaluate text classification models used in VoC analytics?

Report precision, recall, and F1 at the theme level. Maintain gold-standard labeled sets, run periodic error analysis by segment and channel, and document classifier and taxonomy versions in metadata.³

Which privacy rules govern VoC data in Australia?

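The Australian Privacy Act and OAIC guidance govern the handling of personal information in Australia, and GDPR may also apply where relevant, for example when the program processes data about customers in the EU. In practice, ensure a lawful basis for processing and implement consent capture, minimization, and retention controls.⁴ ⁵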

Who should own closed-loop action from VoC insights?

Assign each high-impact theme to a product or operations owner with a service level to diagnose, fix, and publish customer-visible improvements with dates and links to change records.¹

Which platforms support multi-source VoC programs used by Customer Science?

Platforms such as Medallia and Qualtrics provide connectors, analytics, and workflow capabilities that support survey, transcript, and review ingestion with closed-loop routing to owners.⁶ ⁷

Sources

1. ISO 10004: Quality management. Customer satisfaction. Guidelines for monitoring and measuring. International Organization for Standardization. 2018. ISO. https://www.iso.org/standard/70332.html
2. Measuring the User Experience on a Large Scale: User-Centered Metrics for Web Applications. Rodden, Hutchinson, Fu. 2010. CHI/Google Research. https://research.google/pubs/pub36299/
3. Model Evaluation: Classification Metrics. scikit-learn documentation. 2024. scikit-learn. https://scikit-learn.org/stable/modules/model_evaluation.html#classification-metrics
4. GDPR Article 6: Lawfulness of processing. European Union GDPR Portal. 2018. EU. https://gdpr.eu/article-6-lawfulness-of-processing/
5. The Privacy Act. Office of the Australian Information Commissioner. 2025. OAIC. https://www.oaic.gov.au/privacy/the-privacy-act
6. What is Voice of the Customer (VoC)? Qualtrics XM Institute. 2024. Qualtrics. https://www.qualtrics.com/experience-management/customer/voice-of-the-customer/
7. What is Voice of the Customer? Medallia Resource Center. 2024. Medallia. https://www.medallia.com/resource/what-is-voice-of-the-customer/
8. The One Number You Need to Grow. Reichheld. 2003. Harvard Business Review. https://hbr.org/2003/12/the-one-number-you-need-to-grow
9. Using Thematic Analysis in Psychology. Braun, Clarke. 2006. Qualitative Research in Psychology. https://www.tandfonline.com/doi/abs/10.1191/1478088706qp063oa
10. CRISP-DM 1.0 Step-by-step Data Mining Guide. Chapman et al. 2000. The Modeling Agency. https://the-modeling-agency.com/crisp-dm.pdf