Why should service leaders shift from opinion to evidence?

Executives face decision risk when ideas move from research to concept without real validation. Leaders invest in features, channels, and service models that customers never adopt. Experiment-driven design changes this pattern. Teams frame assumptions as hypotheses, test them with real users, and let evidence guide scope and sequencing. This discipline reduces waste, increases learning speed, and aligns stakeholders around measurable outcomes. The method fits complex service environments where processes, people, and platforms must align for value to appear. It treats every concept as a set of testable bets. It rewards teams that learn early, not teams that launch late. It gives Customer Experience leaders a way to convert insights into decisions that stand up in boardrooms and on call floors.¹

What is experiment-driven design in customer experience?

Experiment-driven design is a structured approach that uses rapid, low-cost experiments to validate desirability, feasibility, and viability before full design and build. In practice, a team writes hypotheses, defines success metrics, and runs controlled tests with customers in natural contexts. The approach blends design thinking for empathy, pretotyping for speed, and online controlled experiments for statistical confidence. Hypotheses anchor every move. Evidence shapes every decision. Concepts earn their way forward by demonstrating traction with real customers. This discipline keeps teams honest about uncertainty. It reduces the gap between a promising insight and an investable concept. It builds a habit of learning that scales across products, channels, and services.² ³

Where do the best hypotheses come from?

Strong hypotheses come from explicit problem framing. Teams start with a customer job-to-be-done, which is a stable definition of the progress a customer seeks in a given situation. Jobs clarify desired outcomes and tradeoffs. They cut through solution bias and feature noise. When a customer hires a service to do a job, the metric that matters is outcome improvement, not feature count. A clear job statement leads to sharper assumptions about value, willingness to pay, and switching triggers. That clarity improves test design. It focuses teams on the smallest behavior change that would prove value. It also aligns stakeholders on what success looks like long before any build begins.⁴

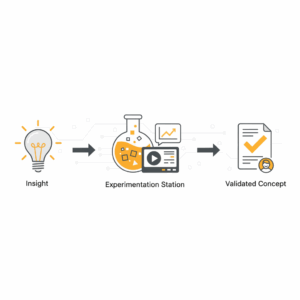

How do we translate insight into a testable concept?

Teams translate insights into testable concepts by decomposing the idea into value hypotheses and growth hypotheses. Value hypotheses cover who benefits, what moment matters, and why the new behavior beats the current workaround. Growth hypotheses cover how people will discover, adopt, and repeat the service. Each hypothesis becomes a test card: premise, method, metric, and threshold. The structure forces debate on evidence quality. A concierge prototype, a clickable flow, or a service script can all produce early signals. The mechanism does not need to be beautiful. It needs to be believable. Strong teams choose the lightest method that can invalidate a risky assumption.⁵
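To make the test card concrete, here is a minimal Python sketch of its four fields. The class name `HypothesisCard`, the `evaluate` helper, and the callback example with its 30% threshold are illustrative assumptions, not part of any published template.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HypothesisCard:
    """One test card: premise, method, metric, and threshold."""
    premise: str      # the risky assumption being tested
    method: str       # the lightest experiment that could invalidate it
    metric: str       # the behavior that will be measured
    threshold: float  # minimum observed value that counts as support

    def evaluate(self, observed: float) -> str:
        """Compare an observed metric value against the agreed threshold."""
        return "supported" if observed >= self.threshold else "not supported"


# Hypothetical example: all values below are made up for illustration.
card = HypothesisCard(
    premise="Callers on hold will accept a scheduled callback",
    method="Marked-up call script in one queue",
    metric="Share of offered callers who accept the callback",
    threshold=0.30,
)

print(card.evaluate(0.36))  # supported
print(card.evaluate(0.12))  # not supported
```

Fixing the threshold before the test runs is the point of the card: the team debates what counts as evidence up front, not after the data arrives.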

Which experiment types fit service innovation?

Service leaders pick experiment types that suit channel realities and risk. Several patterns work well:

Pretotypes simulate value with minimal build. A landing page with a waitlist measures demand. A manual back office simulates automation. A marked-up script tests a new call flow in one queue before rollout. Pretotypes create believable experiences without full systems.²

Wizard-of-Oz and concierge trials use human effort to deliver the experience behind the scenes. They generate qualitative insight and real conversion data. Teams learn where automation will pay back.⁵

Online controlled experiments run A/B or multivariate tests on digital touchpoints. They quantify effect sizes, detect regressions, and build a durable evidence base for decisions.³ ⁶

Conjoint or discrete choice models estimate value tradeoffs when live testing is impractical. Teams size willingness to pay and feature priorities before committing to scope.⁷

Service blueprint pilots stage a new service moment in one store, region, or queue. They expose process and policy risks early and provide operational learning that analytics alone cannot reveal.⁸
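Of the patterns above, online controlled experiments lend themselves most directly to a worked calculation. The sketch below runs a pooled two-proportion z-test on an A/B result using only the Python standard library. The function name and the conversion counts are hypothetical, and a production platform would normally add guardrails and corrections for repeated looks at the data.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (absolute lift, z statistic, two-sided p-value) for B versus A."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled conversion rate under the null hypothesis of no difference.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return p_b - p_a, z, p_value


# Hypothetical counts: 200 of 2,000 convert in control, 250 of 2,000 in the variant.
lift, z, p_value = two_proportion_z_test(200, 2000, 250, 2000)
print(f"lift={lift:.3f}, z={z:.2f}, p={p_value:.4f}")
```

The lift is the effect size that feeds the business case; the p-value only indicates how surprising that lift would be if the variant made no difference.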

How do we protect validity without slowing speed?

Teams protect validity by matching the test to the decision. Not every choice needs a full-scale online experiment. Some choices need signal, not significance. Others require controlled experiments with guardrails, pre-registered metrics, and clear power calculations. Leaders set a portfolio cadence. They reserve the heavy instruments for decisions with irreversible cost or high customer risk. They publish a taxonomy of test levels, from discovery probes to confirmatory trials. They also define minimum evidence for funding gates. This governance reduces debate and accelerates teams. Quality improves because method choice becomes explicit and consistent.³ ⁶
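A power calculation for a confirmatory trial can be sketched with the standard normal-approximation formula for comparing two proportions. The function name, the 10% baseline, and the two-point minimum detectable effect are assumptions chosen for illustration; the defaults of 5% significance and 80% power are common conventions, not requirements.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_arm(baseline: float, mde: float,
                        alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate customers needed per arm to detect an absolute lift of `mde`."""
    p1 = baseline
    p2 = baseline + mde
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance level
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / mde ** 2)


# Hypothetical scenario: 10% baseline conversion, detect a 2-point absolute lift.
n_large_effect = sample_size_per_arm(baseline=0.10, mde=0.02)
# Halving the detectable effect roughly quadruples the required sample.
n_small_effect = sample_size_per_arm(baseline=0.10, mde=0.01)
print(n_large_effect, n_small_effect)
```

This is why discovery probes and confirmatory trials sit at different test levels: small effects demand sample sizes that only high-traffic touchpoints can supply in a reasonable time.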

What changes in the operating model?

Experiment-driven design changes roles, rituals, and tooling. Product owners frame hypotheses and secure small budgets for probes. Researchers own problem clarity and bias control. Designers craft pretotypes that feel real enough to test behavior. Data scientists define metrics, sampling, and guardrails. Operations leaders sponsor blueprint pilots and change management. The group ships weekly learning, not quarterly presentations. The cadence includes test reviews, kill decisions, and scaled rollouts. A central experimentation platform logs hypotheses, variants, and outcomes so learning compounds. Over time, the culture celebrates retired ideas as wins because they saved money and time.³

How do we measure value beyond conversion lifts?

Executives measure value across four lenses. Learning velocity tracks hypotheses tested per quarter and time to decision. Risk retired tracks the percentage of high-uncertainty assumptions invalidated at low cost. Customer outcome movement tracks changes in effort, resolution, and satisfaction at key moments. Financial impact tracks revenue, margin, and cost-to-serve from scaled variants. Teams also monitor experiment quality, including sample ratio mismatch checks, guardrail breaches, and false positive rates. These metrics reward disciplined learning and protect customers from unintended harm. They keep concept work accountable to business outcomes, not just creative output.³ ⁶
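One of the experiment quality checks named above, the sample ratio mismatch check, can be sketched as a chi-square goodness-of-fit test on the traffic split. The function name, the visitor counts, and the 0.001 alert threshold are assumptions for illustration; teams set their own alerting rules.

```python
from math import erfc, sqrt


def srm_p_value(n_control: int, n_treatment: int,
                expected_control_share: float = 0.5) -> float:
    """P-value of a chi-square test (1 degree of freedom) on the observed split."""
    total = n_control + n_treatment
    expected_control = total * expected_control_share
    expected_treatment = total - expected_control
    chi2 = ((n_control - expected_control) ** 2 / expected_control
            + (n_treatment - expected_treatment) ** 2 / expected_treatment)
    # For 1 degree of freedom, the chi-square survival function is erfc(sqrt(chi2 / 2)).
    return erfc(sqrt(chi2 / 2))


SRM_ALERT_THRESHOLD = 0.001  # assumed alerting threshold

# Hypothetical traffic: a 50/50 test that delivered 50,000 vs 51,500 visitors.
p = srm_p_value(50_000, 51_500)
if p < SRM_ALERT_THRESHOLD:
    print("Sample ratio mismatch: investigate assignment before reading results")
```

A very small p-value here says the split itself is unlikely under the intended allocation, so any conversion lift from that test should be treated as suspect until the assignment issue is explained.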

How does co-creation improve experiment quality?

Co-creation pulls customers and frontline staff into design and test cycles. Customers help shape value propositions, weigh tradeoffs, and design real-world scenarios. Frontline teams surface operational edge cases and policy constraints that lab tests miss. Co-creation does not mean consensus design. It means structured participation with clear decision rights and evidence thresholds. The result is higher signal quality, less rework, and stronger adoption because people see their fingerprints on the solution. Co-creation shines in services where trust, timing, and handoffs define value more than interfaces do. It converts relationship capital into design advantage.¹ ⁹

What does a practical roadmap look like?

Leaders can start with a focused, 12-week program that moves one opportunity from insight to concept:

Weeks 1 to 2. Frame the job and map the moment. Define the job-to-be-done, target segments, and the high-friction service moment. Produce a service blueprint with pain, policy, and data touchpoints.⁸

Weeks 3 to 4. Write hypotheses and choose methods. Break the concept into value and growth hypotheses. Select pretotypes, concierge trials, or online experiments based on risk and channel.² ³

Weeks 5 to 8. Run probes and refine. Execute rapid tests, record evidence in the platform, and prune the backlog. Kill weak variants quickly. Improve promising ones with targeted research.³

Weeks 9 to 10. Confirm and size. Use controlled experiments or conjoint to size effect and economics. Validate operational load with a blueprint pilot in one channel.⁶ ⁷ ⁸

Weeks 11 to 12. Decide and scale. Present a concept with validated evidence, risk profile, and rollout plan. Secure investment only for variants that earned it.

This roadmap creates a repeatable path from customer insight to fundable concept with clear governance and measurable outcomes.¹ ³

How do we embed this capability at enterprise scale?

Enterprises institutionalize experiment-driven design by codifying standards, building a common platform, and training practitioners. Standards define test levels, ethics, data privacy, and kill criteria. A shared platform captures hypotheses, results, and learnings across teams. Training focuses on hypothesis writing, pretotyping craft, experiment design, and service blueprinting. Leaders protect time for weekly test reviews and fund a central enablement unit that supports pilots. Vendors and partners integrate into the cadence, not the other way around. Over time, the enterprise builds a library of reusable patterns and guardrails that accelerate future concepts. This is how the practice outlasts the project.³ ⁸

What impact should executives expect in the first year?

Executives should expect faster cycle times, fewer large failed launches, and clearer links between customer outcomes and commercial results. Portfolio data should show more small bets, shorter time to pivot or persevere, and higher win rates for scaled rollouts. Customer metrics should improve at targeted service moments, not just in aggregate. Teams should retire more ideas earlier and redeploy budget to proven variants. The operating model should feel lighter because decisions trace to evidence, not hierarchy. Culture should shift toward curiosity, respect for the customer, and pride in disciplined craft. These are the visible markers of experiment-driven design maturity.¹ ³

Call to action for Customer Experience and Service leaders

Leaders set the tone. Sponsor one concept and demand hypotheses, tests, and clear gates. Ask for a service blueprint that shows the real work. Reward teams for what they learned, not just what they launched. Build the platform and the practice. Make co-creation a habit, not an event. When evidence leads, concepts earn trust and investment. When concepts earn trust, transformation moves faster with less risk. That is how organizations turn insight into impact with confidence.¹ ²

FAQ

What is experiment-driven design in Customer Experience and Service Transformation?

Experiment-driven design is a method that uses rapid experiments to validate desirability, feasibility, and viability before full build. Teams turn insights into hypotheses, run pretotypes or controlled tests, and scale only what earns evidence.² ³

How does co-creation with customers and frontline teams improve service concepts?

Co-creation brings customers and staff into design and testing. It increases signal quality, reveals operational realities, and improves adoption by giving stakeholders a hand in shaping the solution.¹ ⁹

Which experiment types work best for service innovation at scale?

Pretotypes and concierge trials test value quickly. Online controlled experiments quantify impact on digital touchpoints. Conjoint analysis sizes tradeoffs when live testing is hard. Service blueprint pilots validate operations in one region or queue.² ³ ⁷ ⁸

Why should executives prioritize a centralized experimentation platform?

A shared platform logs hypotheses, variants, metrics, and outcomes. It preserves learning, prevents duplicate work, and enforces guardrails across products and channels. This creates compound value over time.³ ⁶

What metrics show progress in experiment-driven design?

Track learning velocity, risk retired, outcome movement at key service moments, and financial impact from scaled variants. Monitor experiment quality with guardrail metrics and sampling checks to protect validity.³ ⁶

Which frameworks help craft strong hypotheses from customer insights?

Jobs-to-be-done provides stable problem framing. Service blueprinting maps people, process, policy, and tech at the moment of truth. Together, they sharpen value and growth hypotheses for testing.⁴ ⁸

How can Customer Science (customerscience.com.au) support organizations?

Customer Science helps leaders frame jobs, design service blueprints, set up experimentation platforms, and run pretotypes, pilots, and controlled experiments. The team builds capability while delivering validated concepts that reduce risk and accelerate transformation.¹ ² ³

Sources

1. Eric Ries. 2011. The Lean Startup. Crown Business. https://theleanstartup.com/

2. Alberto Savoia. 2011. Pretotype It. Self-published. https://www.pretotyping.org/

3. Ron Kohavi, Diane Tang, Ya Xu. 2020. Trustworthy Online Controlled Experiments. Cambridge University Press. https://experimentguide.com/

4. Clayton M. Christensen, Taddy Hall, Karen Dillon, David S. Duncan. 2016. Competing Against Luck. Harper Business. https://www.hbs.edu/faculty/Pages/item.aspx?num=51421

5. Stanford d.school. 2018. Design Thinking Bootleg. Hasso Plattner Institute of Design at Stanford. https://dschool.stanford.edu/resources/design-thinking-bootleg

6. Ron Kohavi, Alex Deng, Brian Frasca, et al. 2013. Online Controlled Experiments at Large Scale. KDD. https://exp-platform.com/

7. Sawtooth Software. 2021. Conjoint Analysis: A Guide for Designing and Analyzing Choice Experiments. Sawtooth Software. https://sawtoothsoftware.com/resources/conjoint-analysis

8. Mary Jo Bitner, Amy L. Ostrom, Felicia N. Morgan. 2008. Service Blueprinting: A Practical Technique for Service Innovation. California Management Review. https://wpcarey.asu.edu/sites/default/files/service_blueprinting_2008.pdf

9. C. K. Prahalad, Venkat Ramaswamy. 2004. Co-creation experiences: The next practice in value creation. Journal of Interactive Marketing. https://deepdyve.com/lp/wiley/co-creation-experiences-the-next-practice-in-value-creation-3sQwY8Q8yP (abstract)