Why do service leaders need disciplined experiment design now?

Executives face volatile customer expectations and rising operational complexity. Teams deploy features, scripts, and policies quickly, yet many changes underperform in production. Leaders reduce that risk when they treat each change as a falsifiable hypothesis and evaluate it through controlled experiments. Well-designed experiments separate signal from noise, quantify causal impact, and create a reusable knowledge base for future decisions. Online controlled experiments, often called A/B tests, provide the most reliable way to estimate causal effects in digital and service journeys when randomization and guardrails are in place.¹

What is a service experiment in plain terms?

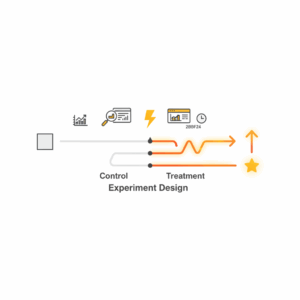

Teams run a service experiment when they randomize customers, interactions, or agents into comparable groups to test a change and measure outcomes. A control group experiences the current process. A treatment group experiences the proposed variant. Random assignment balances unobserved factors, so outcome differences can be attributed to the change with known uncertainty. This structure applies across channels. Contact centres test call routing rules. Field services test schedule notifications. Digital self-service tests copy, layout, and policy prompts. Trustworthy experiments start with a clear hypothesis, pre-specified metrics, and a decision rule that defines what success means.²

How do we frame the problem so the experiment answers the right question?

Leaders start by translating a business objective into an explicit causal question. For example, “Does proactive outage messaging reduce avoidable calls without harming satisfaction?” The team defines a minimum detectable effect that is meaningful in the operation, not just statistically significant. The team sets primary metrics that reflect the objective, such as customer satisfaction, first contact resolution, or average handling time, and establishes guardrail metrics that keep the experience safe, such as complaints and churn. Pre-registration of hypotheses and metrics reduces selective reporting and increases trust in the results.²
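One way to make this framing concrete is to record it as a small, immutable pre-registration object before any data is collected. The sketch below is illustrative only: the class names, field names, and the relative-increase guardrail rule are assumptions, not a standard schema.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Guardrail:
    """A metric that must not worsen by more than a pre-agreed relative amount."""
    metric: str
    max_relative_increase: float  # e.g. 0.10 means "no more than 10% worse"


@dataclass(frozen=True)
class ExperimentSpec:
    """Hypothetical pre-registration record; frozen so it cannot be edited later."""
    hypothesis: str
    primary_metric: str
    minimum_detectable_effect: float  # absolute change that matters operationally
    guardrails: Tuple[Guardrail, ...] = ()
    alpha: float = 0.05
    power: float = 0.80

    def breached(self, metric: str, control_rate: float, treatment_rate: float) -> bool:
        """Return True if the named guardrail metric rose beyond its threshold."""
        for g in self.guardrails:
            if g.metric == metric and control_rate > 0:
                relative_change = (treatment_rate - control_rate) / control_rate
                if relative_change > g.max_relative_increase:
                    return True
        return False


spec = ExperimentSpec(
    hypothesis="Proactive outage messaging reduces avoidable calls "
               "without harming satisfaction",
    primary_metric="avoidable_call_rate",
    minimum_detectable_effect=0.02,
    guardrails=(Guardrail("complaint_rate", 0.10),),
)
```

Freezing the record is a design choice: any change to the hypothesis or thresholds after launch then requires a new, visibly different specification.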

Where does service blueprinting strengthen experiment scope?

Teams improve experiment design when they use service blueprinting to visualise all touchpoints, actors, and backstage systems involved in the change. A blueprint identifies where randomization can occur, where contamination might leak between groups, and where to capture data without disrupting the experience. Blueprinting also reveals failure modes, such as agents sharing tips across arms or customers seeing mixed variants in omnichannel sequences. This clarity reduces bias and speeds delivery by aligning stakeholders on what will change and how to measure it.³

How do we choose units and randomization in operational contexts?

Designers choose the experimental unit that best balances power, practicality, and contamination risk. Customer-level randomization usually offers the most power in digital flows. Interaction-level randomization suits chat or email, provided a customer who sees both variants does not distort the outcome. Agent-level randomization suits contact centres where one agent cannot cleanly switch between variants from call to call. Cluster randomization at team, site, or region level reduces spillover when agents share tactics or policy changes are visible, at the cost of statistical power. Stratified or blocked assignment controls for known covariates such as segment, time of day, or queue, improving precision with little added complexity. Sequential designs allow early stopping for efficacy or futility when pre-specified rules are met.²
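The two assignment styles described above can be sketched in a few lines. This is a minimal illustration, not a production assignment service: the function names, the SHA-256 bucketing scheme, and the 50/50 split are assumptions chosen for clarity.

```python
import hashlib
import random
from collections import defaultdict


def assign_by_hash(unit_id: str, experiment: str, treatment_share: float = 0.5) -> str:
    """Deterministically map a unit (customer, interaction, or agent) to an arm.

    Hashing the experiment name together with the unit id keeps the assignment
    stable across sessions and independent between experiments.
    """
    digest = hashlib.sha256(f"{experiment}:{unit_id}".encode()).hexdigest()
    bucket = int(digest[:8], 16) / 0x100000000  # uniform value in [0, 1)
    return "treatment" if bucket < treatment_share else "control"


def blocked_assignment(units, stratum_of, seed=0):
    """Shuffle within each stratum and split it in half, balancing arms per stratum.

    units: list of unit ids; stratum_of: dict mapping unit id -> stratum label.
    With an odd-sized stratum the extra unit goes to control.
    """
    rng = random.Random(seed)
    by_stratum = defaultdict(list)
    for unit in units:
        by_stratum[stratum_of[unit]].append(unit)

    arms = {}
    for stratum in sorted(by_stratum):
        members = by_stratum[stratum]
        rng.shuffle(members)
        half = len(members) // 2
        for position, unit in enumerate(members):
            arms[unit] = "treatment" if position < half else "control"
    return arms


# Hypothetical example: eight agents blocked by shift.
agents = [f"agent-{i}" for i in range(8)]
shift = {a: ("day" if i < 4 else "night") for i, a in enumerate(agents)}
agent_arms = blocked_assignment(agents, shift, seed=42)
```

Hash-based assignment fits high-volume digital flows where units arrive continuously. Blocked assignment fits a known, finite roster such as agents or sites.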

Which metrics matter for executives and why?

Executives pick primary metrics that represent value creation. For customer experience, these include satisfaction, effort, complaint rates, and retention. For operations, these include resolution rate, average handling time, and rework. For growth, these include conversion and upsell. Many organisations use Net Promoter Score to track loyalty over time, but they should validate the link between NPS, behaviour, and economics in their context before treating it as a primary decision metric.⁴ Leaders couple these metrics with guardrails that protect service quality, ethics, and compliance.²

How do we make the experiment analysis trustworthy?

Analysts predefine the population, metrics, statistical model, and decision criteria. They compute minimum sample size based on baseline rates, variance, desired power, and effect size. They keep randomization intact and avoid ad hoc interim looks (“peeking”), which inflate false positives unless the stopping rules were pre-specified in a sequential design. They prefer intent-to-treat estimates to reflect operational reality and use nonparametric checks when distributional assumptions fail. They correct for multiple comparisons across variants or segments. They report confidence intervals, not just p-values, and emphasise practical significance aligned to cost-to-serve and customer lifetime value. Rigorous analysis keeps results credible and repeatable.¹
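As a rough illustration of the sample size and confidence interval steps, the sketch below uses the standard normal-approximation formulas for comparing two proportions. The function names and the example figures (a 70% baseline resolution rate and a 3-point minimum detectable effect) are assumptions for illustration; a real analysis would follow the pre-registered model.

```python
from math import ceil, sqrt
from statistics import NormalDist

_STD_NORMAL = NormalDist()


def sample_size_per_arm(baseline_rate, minimum_detectable_effect,
                        alpha=0.05, power=0.80):
    """Approximate units needed per arm for a two-sided test of two proportions."""
    p_control = baseline_rate
    p_treatment = baseline_rate + minimum_detectable_effect
    z_alpha = _STD_NORMAL.inv_cdf(1 - alpha / 2)
    z_power = _STD_NORMAL.inv_cdf(power)
    variance = p_control * (1 - p_control) + p_treatment * (1 - p_treatment)
    return ceil((z_alpha + z_power) ** 2 * variance / minimum_detectable_effect ** 2)


def difference_ci(successes_control, n_control,
                  successes_treatment, n_treatment, alpha=0.05):
    """Wald confidence interval for (treatment rate - control rate)."""
    p_c = successes_control / n_control
    p_t = successes_treatment / n_treatment
    standard_error = sqrt(p_c * (1 - p_c) / n_control + p_t * (1 - p_t) / n_treatment)
    z = _STD_NORMAL.inv_cdf(1 - alpha / 2)
    difference = p_t - p_c
    return difference - z * standard_error, difference + z * standard_error


# Hypothetical planning input: 70% baseline resolution, 3-point lift worth detecting.
n_per_arm = sample_size_per_arm(0.70, 0.03)

# Hypothetical results: 70% vs 74% resolution on 3,550 customers per arm.
low, high = difference_ci(2485, 3550, 2627, 3550)
```

With these inputs the requirement comes to roughly 3,550 customers per arm. Halving the minimum detectable effect roughly quadruples that number, which is why the effect size should be agreed before launch.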

What are common pitfalls in service A/B tests and how do we avoid them?

Leaders avoid biased results by protecting randomization from operational shortcuts. They check for sample ratio mismatches, ensure uniform exposure, and account for novelty effects by running long enough to cover demand cycles. They verify that caching, redirects, and session handling do not break assignment in digital flows. They handle seasonality, outages, and policy changes through blackout periods or covariate adjustment. They apply privacy-preserving techniques, such as differential privacy, when experiments touch sensitive data. Most importantly, they stop experiments that harm customers by monitoring guardrails in near real time with pre-specified thresholds.¹
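A sample ratio mismatch check compares the observed split between arms with the split the design intended. The sketch below uses a chi-square goodness-of-fit test with one degree of freedom, computed with the standard library only. The function name and the 0.001 alert threshold are assumptions; teams should set their own threshold in advance.

```python
from math import erfc, sqrt


def srm_p_value(n_control: int, n_treatment: int,
                expected_treatment_share: float = 0.5) -> float:
    """P-value for the hypothesis that the observed split matches the design."""
    total = n_control + n_treatment
    expected_treatment = total * expected_treatment_share
    expected_control = total - expected_treatment
    statistic = ((n_control - expected_control) ** 2 / expected_control
                 + (n_treatment - expected_treatment) ** 2 / expected_treatment)
    # Survival function of a chi-square distribution with 1 degree of freedom.
    return erfc(sqrt(statistic / 2))


SRM_ALERT_THRESHOLD = 0.001  # assumed threshold, to be set before launch

# Hypothetical counts from an intended 50/50 split.
p_value = srm_p_value(n_control=50_000, n_treatment=51_200)
needs_investigation = p_value < SRM_ALERT_THRESHOLD
```

A very small p-value suggests that assignment or logging is broken, so the outcome comparison should not be trusted until the cause is found.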

How do we integrate qualitative insight without weakening causality?

Teams pair controlled experiments with qualitative research to explain mechanisms. Post-interaction surveys, agent debriefs, and session replays reveal why a variant works or fails. Service blueprinting and journey mapping integrate these insights back into the next hypothesis. This mixed-method loop maintains causal clarity while accelerating learning. Leaders document not only the winner, but the context and mechanism so knowledge compounds across teams.³

How do we run experiments ethically in real customer journeys?

Service organisations experiment ethically when they respect autonomy, benefit customers, and minimise harm. Leaders apply ethical review proportional to risk, obtain consent when appropriate, and restrict manipulations that would surprise reasonable customers or erode trust. They safeguard personal data and apply data protection principles such as purpose limitation and data minimisation. Many jurisdictions require strict controls for personal data processing, including lawful basis, transparency, and rights for individuals. Clear governance and audit trails preserve legitimacy while enabling innovation at scale.⁵ ⁶

How does experiment design compare to observational analytics?

Experiment design answers causal questions by construction, while observational analytics infers causality through modelling assumptions. Observational methods such as difference-in-differences and propensity scores help when randomization is impossible, but they remain vulnerable to unobserved confounding. Executives should prefer experiments for reversible service changes, then use observational approaches to triangulate where experiments are not feasible. The combined approach yields faster cycles and more robust decisions across complex service portfolios.¹
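For reference, the simplest form of difference-in-differences subtracts the change seen in a comparison group from the change seen in the group that received the change. The sketch below is illustrative, with hypothetical weekly call volumes, and it is only valid under the parallel trends assumption: without the change, both groups would have moved by the same amount.

```python
from statistics import mean


def difference_in_differences(treated_pre, treated_post, control_pre, control_post):
    """Change in the treated group minus change in the comparison group.

    Interpretable as a causal effect only if both groups would have followed
    parallel trends in the absence of the change.
    """
    treated_change = mean(treated_post) - mean(treated_pre)
    control_change = mean(control_post) - mean(control_pre)
    return treated_change - control_change


# Hypothetical weekly avoidable calls (thousands) for two regions.
effect = difference_in_differences(
    treated_pre=[10, 12], treated_post=[8, 8],
    control_pre=[10, 10], control_post=[9, 9],
)
```

Here the treated region fell by 3 and the comparison region by 1, so the estimate attributes a reduction of 2 to the change. No randomization backs that attribution, which is why the text treats such estimates as triangulation rather than proof.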

Where do “test and learn” programs create enterprise value?

Enterprises capture outsized value when they scale experimentation on a shared platform with governance. A central experimentation service standardises assignment, metrics, telemetry, and guardrails across brands and channels. This unit curates a backlog of hypotheses linked to strategy, provides templates, and trains analysts and product owners. Over time, the organisation shifts from opinion to evidence. Leaders see compounding impact as small wins stack and failed ideas fail fast and cheaply. A well-run program becomes a strategic asset for customer experience and service transformation.¹ ²

How should leaders measure impact and decide the next step?

Executives close the loop by translating experimental effects into economics. They model cost-to-serve, conversion, retention, and risk to estimate incremental value. They incorporate uncertainty with scenario bands and decision thresholds. They then choose to scale, iterate, or retire the change. Teams log decisions, rationale, and metadata into a knowledge base indexed by segment, channel, and outcome. This discipline prevents re-testing solved questions and increases reuse across the portfolio. It also builds organisational memory that outlasts staff changes.¹
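The translation from effect to economics can be sketched by pushing the lower bound, point estimate, and upper bound of the confidence interval through a simple value model. Everything here is an assumption for illustration: the function names, the linear value model, the example figures, and the decision rule (scale if even the low scenario pays back, retire if even the high scenario does not, otherwise iterate).

```python
def annual_value(effect, annual_volume, value_per_unit, annual_run_cost):
    """Net annual value of a change given an absolute effect on a rate."""
    return effect * annual_volume * value_per_unit - annual_run_cost


def decide(ci_low, point_estimate, ci_high,
           annual_volume, value_per_unit, annual_run_cost):
    """Return scenario values and a scale / iterate / retire recommendation."""
    scenarios = {
        name: annual_value(effect, annual_volume, value_per_unit, annual_run_cost)
        for name, effect in (("low", ci_low),
                             ("expected", point_estimate),
                             ("high", ci_high))
    }
    if scenarios["low"] > 0:
        decision = "scale"
    elif scenarios["high"] <= 0:
        decision = "retire"
    else:
        decision = "iterate"
    return scenarios, decision


# Hypothetical case: effect = reduction in avoidable call rate,
# 1,000,000 contacts a year, 8.00 saved per avoided call, 50,000 run cost.
scenarios, decision = decide(0.01, 0.02, 0.03,
                             annual_volume=1_000_000,
                             value_per_unit=8.0,
                             annual_run_cost=50_000)
```

Logging the scenario values alongside the decision gives the knowledge base the rationale as well as the outcome.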

What is a pragmatic rollout playbook for service experiments?

Leaders can move from aspiration to practice with a simple playbook. First, standardise hypothesis templates and pre-registration to clarify intent. Second, adopt a service blueprint to define units, touchpoints, and data capture. Third, implement a trustworthy randomization and telemetry platform with guardrails. Fourth, train cross-functional squads in power analysis, metric design, and ethical review. Fifth, publish a weekly experiment digest that shares results and celebrates learning over winning. These steps establish an operating rhythm where evidence informs every change that touches customers.¹ ² ³

FAQ

How do Customer Science and www.customerscience.com.au help leaders start experiment design in service contexts?

Customer Science helps executives define hypotheses, set trustworthy metrics, and implement platform guardrails for A/B testing across contact centre, digital, and field service channels. The team uses service blueprinting to pick the right unit of randomization and creates ethical governance aligned to data protection requirements.¹ ³ ⁶

What is the fastest way to stand up a first service A/B test?

Leaders pick a reversible change with clear value, such as proactive notifications to reduce avoidable calls. They pre-register the hypothesis and metrics, randomize customers or interactions, and monitor guardrails for harm. They run long enough to cover demand cycles and decide based on confidence intervals and practical effect sizes.¹ ²

Which metrics should executives trust for decision quality?

Executives select a single primary metric that reflects value creation in context, such as resolution rate or satisfaction, supported by guardrail metrics for complaints and churn. If using Net Promoter Score, teams validate its link to behaviour and economics before relying on it as a primary decision metric.⁴

Why are online controlled experiments more reliable than observational analytics for service changes?

Randomization balances unobserved factors and delivers causal estimates with known uncertainty. Observational methods remain useful when experiments are impossible, but they rely on assumptions that are hard to verify in operational environments.¹

Who should own experimentation across the enterprise?

A central experimentation service or platform team should own randomization, telemetry, and governance. Product owners and operations leaders should own hypotheses and decisions. This shared model scales learning while protecting customers through consistent guardrails.¹ ²

Which risks should we manage when experimenting with real customers?

Leaders manage risks related to harm, privacy, and fairness. They apply ethical review, protect personal data, and provide transparency consistent with data protection regulations. They stop experiments that breach guardrails and document decisions for audit.⁵ ⁶

How does service blueprinting reduce experiment failure rates?

Blueprinting maps touchpoints, actors, and backstage systems. It reveals contamination risks, defines data capture points, and aligns stakeholders on what changes where. This clarity improves internal validity and speeds delivery.³

Sources

1. Ronny Kohavi, Diane Tang, Ya Xu. 2020. Trustworthy Online Controlled Experiments: A Practical Guide to A/B Testing. Cambridge University Press. https://www.trustworthyexperiment.com

2. Stefan H. Thomke, Jim Manzi. 2014. The Discipline of Business Experimentation. Harvard Business Review. https://hbr.org/2014/12/the-discipline-of-business-experimentation

3. Mary Jo Bitner, Amy L. Ostrom, Felicia N. Morgan. 2008. Service Blueprinting: A Practical Technique for Service Innovation. California Management Review. https://www.researchgate.net/publication/247757693_Service_Blueprinting_A_Practical_Technique_for_Service_Innovation

4. Fred Reichheld. 2003. The One Number You Need to Grow. Harvard Business Review. https://www.bain.com/insights/the-one-number-you-need-to-grow-hbr/

5. The National Commission for the Protection of Human Subjects of Biomedical and Behavioral Research. 1979. The Belmont Report. U.S. Department of Health & Human Services. https://www.hhs.gov/ohrp/regulations-and-policy/belmont-report/index.html

6. European Union. 2016. General Data Protection Regulation (GDPR). Official Journal of the European Union. https://eur-lex.europa.eu/eli/reg/2016/679/oj